Two main tasks that any ASO practitioner has to deal with, no matter what platform or product they work on, are maximizing app store views (from search, featuring, or any other channel) and ensuring a high view-to-install conversion rate (CR).

As you may have guessed from the title, this article is about maximizing that conversion rate. Anyone who has tried to improve app conversion numbers knows that the ways to solve this problem differ between the App Store and Google Play. One of the biggest differences is that Google Play Console gives us a built-in instrument for A/B testing. With its help, we can decide which creatives or texts work better based on numbers.

Store A/B testing – Google Play vs. App Store

Although Google Play A/B tests have their weak points (a fixed 90% confidence level, for example), they still look better than the solutions provided by Apple. Until recently, Apple’s answer to Google’s A/B test tool had been as simple as it gets: nothing. Now we can work with Creative Testing in Apple Search Ads. It is not a full-scale A/B testing service, and it forces you to deal with ASA, but compared to what we had before (yep, Captain Obvious helped me write this part of the article) it’s definitely a big step forward.

Some alternatives to ASA Creative Testing are third-party services like Splitmetrics or Storemaven.
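To show why a fixed 90% confidence level can be a weak point, here is a minimal sketch that computes a confidence interval for the difference in conversion rate between two variants. It uses a textbook normal approximation, which is an assumption for illustration and not necessarily how Google Play Console calculates its intervals. The function name and all numbers are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def uplift_interval(control_installs, control_views,
                    variant_installs, variant_views, confidence=0.90):
    """Return (low, high) bounds for the absolute CR difference (variant - control).

    Uses the normal approximation for the difference of two proportions.
    """
    p_control = control_installs / control_views
    p_variant = variant_installs / variant_views
    # Standard error of the difference between two independent proportions
    std_err = sqrt(p_control * (1 - p_control) / control_views
                   + p_variant * (1 - p_variant) / variant_views)
    # Two-sided critical value for the requested confidence level
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    diff = p_variant - p_control
    return diff - z * std_err, diff + z * std_err


# Hypothetical test: 10,000 views per variant, 3,000 vs. 3,120 installs
low_90, high_90 = uplift_interval(3000, 10000, 3120, 10000, confidence=0.90)
low_95, high_95 = uplift_interval(3000, 10000, 3120, 10000, confidence=0.95)
print(f"90% interval: {low_90:+.4f} .. {high_90:+.4f}")
print(f"95% interval: {low_95:+.4f} .. {high_95:+.4f}")
```

With these made-up numbers, the 90% interval sits entirely above zero, while the 95% interval still includes zero. In other words, a variant can look like a winner at 90% confidence and remain inconclusive under a stricter threshold.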

Looking at all this, many of us (including me) are forced to deal with the big question: can I use a successful Google Play A/B test creative for my App Store product page? Most of us probably know that this is not the best idea, since iOS users may not like creatives that were successful on Google Play. Still, it’s tempting, especially if you don’t have the ability or budget for separate App Store testing. Sure, you can upload something to the App Store and wait to see if it works, but that’s always a gamble.

It so happens that with War Robots we have a case study on exactly that, and we can share some actual numbers on using a successful Google Play A/B test result for an iOS listing. Spoiler: everything is bad.

Case Study: the War Robots Google Play experiment

It all started with our plan to test the first screenshot for War Robots’ Google Play store listing. This time we decided to show some of the product’s achievements. As a result of this idea, our art team created three screenshots that you can see above.

We really wanted to understand how users all over the globe would react to this new concept, so we ran a series of tests for different localizations: US, Great Britain, Russia, Germany, France, Indonesia, and Vietnam. Soon enough, Google Play came up with these numbers:

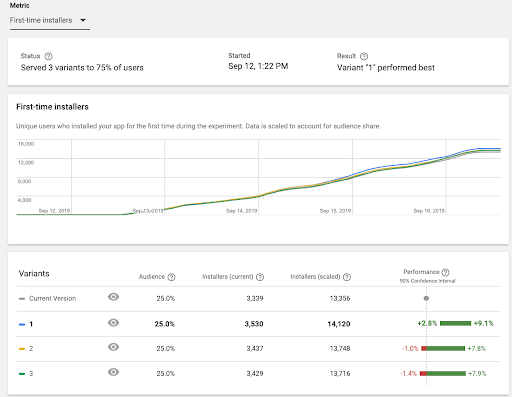

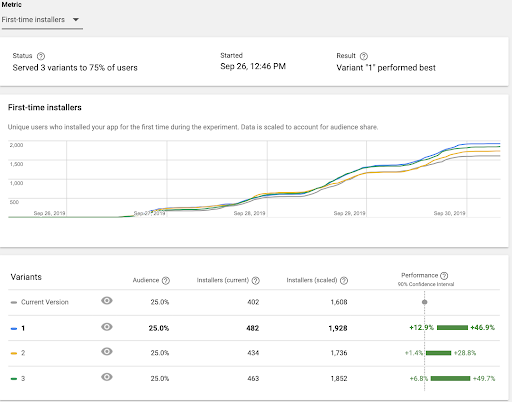

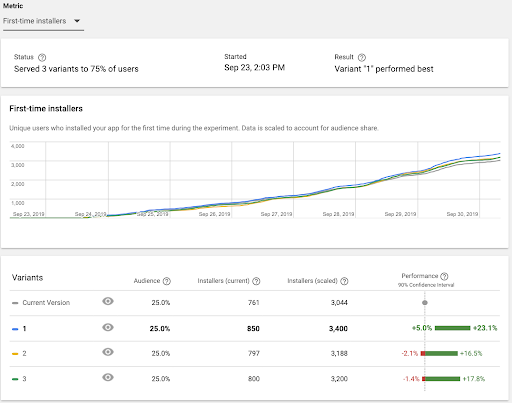

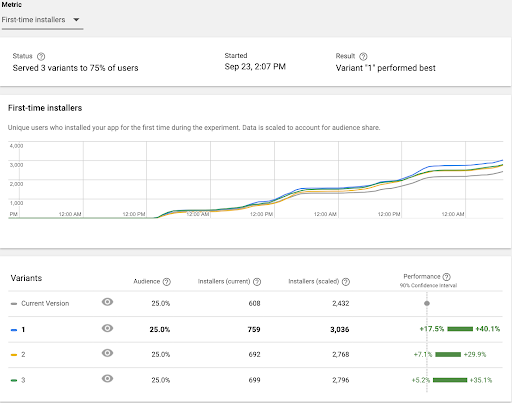

US results:

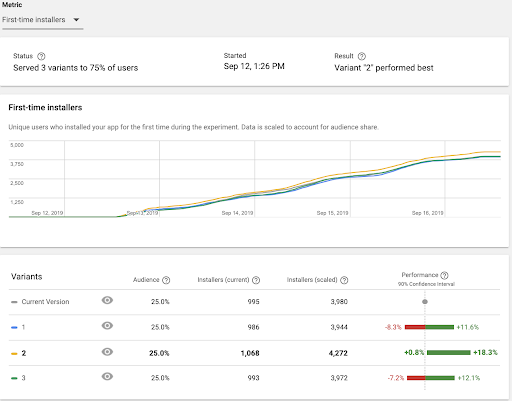

Great Britain results:

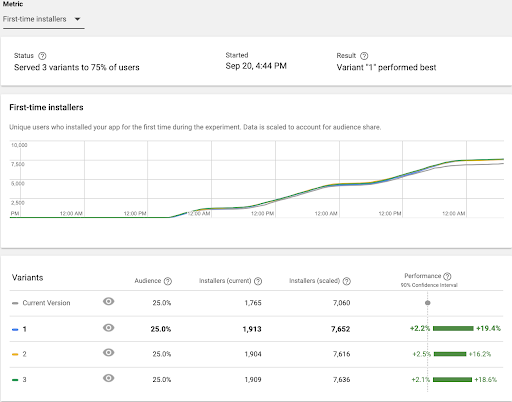

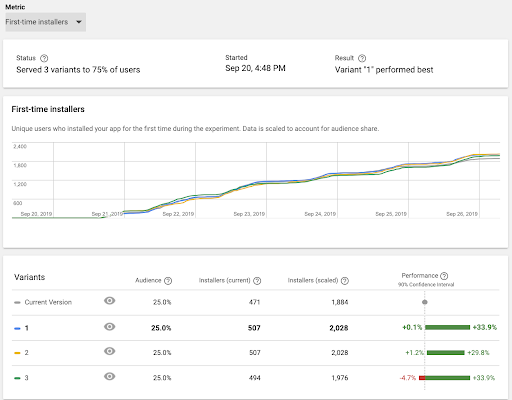

Russia results:

Germany results:

France results:

Indonesia results:

Vietnam results:

Applying the results of the Google Play A/B test to the App Store…

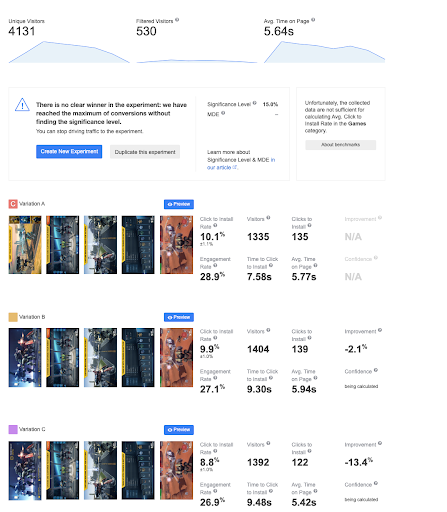

I must say that with results like that, it was really tempting to use them in the App Store immediately, but we decided that we needed to run a test first. So the decision was to run a Splitmetrics test with wide geo-targeting, so that we didn’t have to run seven separate tests. And that is where we found quite a surprise.

Unfortunately, we hit the Splitmetrics conversion limit, but I think that, all in all, the picture more or less says it all.

As you can see, even a definite success in Google Play, proven in multiple localizations, does not mean success in the App Store. No revolutionary conclusions were made here. We just realized once again that one can’t simply ~~walk into Mordor~~ apply Google Play test results to the App Store. We just want to share some actual creatives, and the numbers behind them, with all of you.

One last thing to consider here is the fact that Splitmetrics only works with paid traffic. This means that if we upload new creatives to the store, organic users may react differently from what we saw in the Splitmetrics test with paid traffic only, and the overall result may be different. The right targeting settings in your advertising campaigns will ensure that you get relevant traffic for your test, and therefore relevant results, but there is still room for surprises here.
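The arithmetic behind this caveat can be sketched as a weighted average of paid and organic conversion rates. This is a minimal illustration only: the function name, the traffic split, and every number are hypothetical and are not taken from the War Robots tests.

```python
def blended_cr(paid_share, paid_cr, organic_cr):
    """Overall conversion rate as a traffic-weighted average.

    paid_share is the fraction of store views that come from paid traffic;
    the rest is assumed to be organic.
    """
    return paid_share * paid_cr + (1 - paid_share) * organic_cr


# Hypothetical store listing where 20% of views come from paid campaigns
before = blended_cr(paid_share=0.2, paid_cr=0.30, organic_cr=0.40)
# The new creative wins with paid users but loses slightly with organic users
after = blended_cr(paid_share=0.2, paid_cr=0.33, organic_cr=0.38)
print(f"before: {before:.3f}, after: {after:.3f}")
```

In this made-up scenario the creative improves the paid conversion rate, yet the overall rate drops, because organic users make up most of the traffic and respond worse to it.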

Maybe this article will help some of you prove that separate testing for the App Store is a must, and get additional resources for it.

What do you think? Do you have any similar experience to share?

About the author

Chingiz Talybov, ASO specialist at Pixonic. Can grumble for hours about the last season of Game of Thrones, or argue about the differences between Irish and Belgian beer. Loves video games, good movies, vinyl, and table tennis.